causaLens Benchmarks 20× Higher Reliability Than OpenAI on Complex Knowledge Tasks


TL;DR:

-

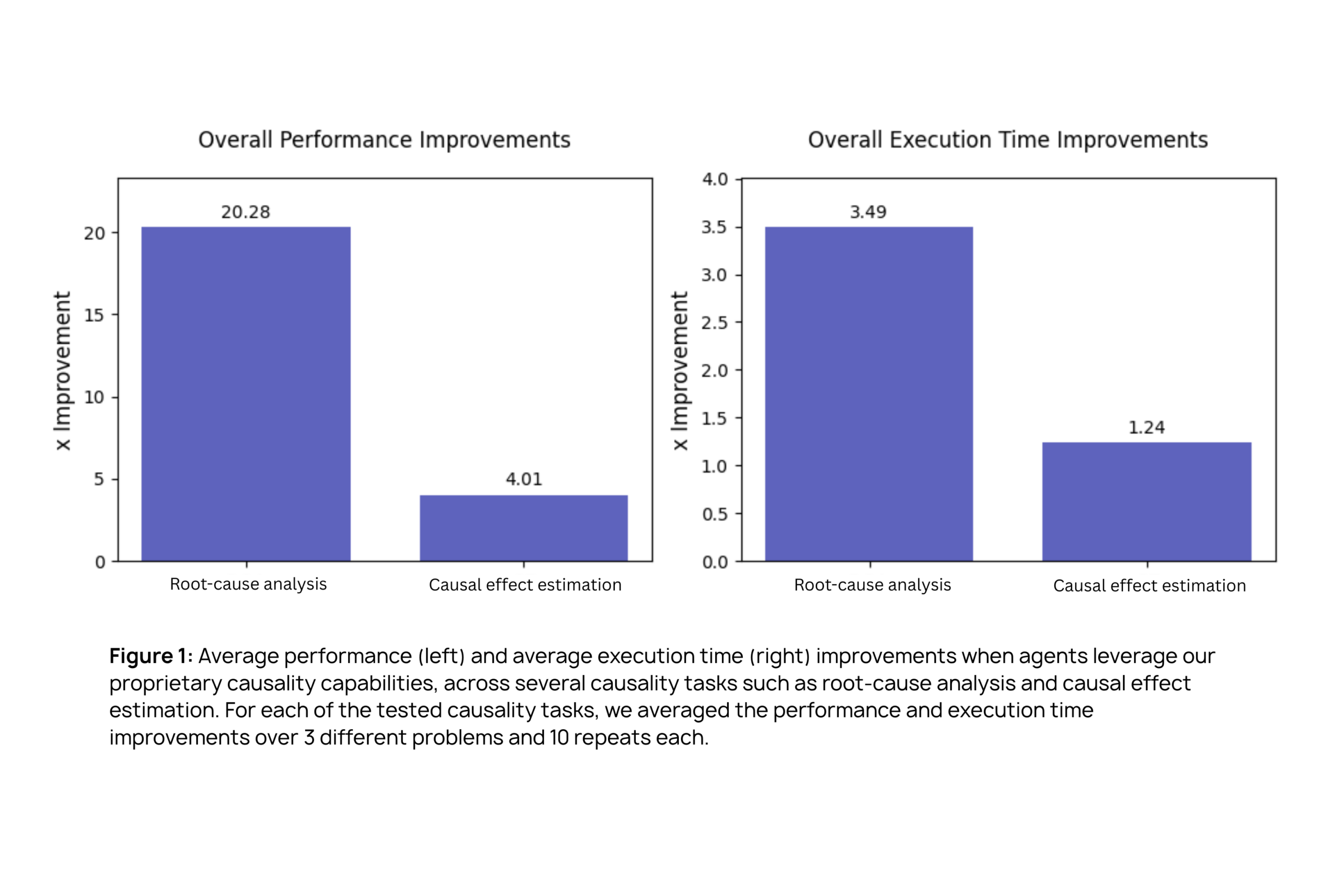

Up to 20× performance gains on complex, causality-heavy tasks (root-cause analysis + causal effect estimation).

-

Up to 3× faster execution than OpenAI’s agents

-

Measured via ground-truth synthetic benchmarks across multiple scenarios and iterations for enterprise readiness.

Traditional AI agents simply aren't built for the kind of high-value, white-collar knowledge work that powers modern enterprises: they falter when real-world complexity and scale step in. For years, organizations have wrestled with AI agents that can't deliver consistent results in high-stakes use cases. By combining deep tech with a highly scalable architecture, we've created Digital Workers that can automate the most mission-critical workflows with much greater determinism. In recent benchmarks, our Digital Workers proved 3x more reliable than OpenAI models on operational workloads and up to 20x more reliable on workloads that require causal reasoning.

To make these results concrete, we outline the benchmarking methodology used to evaluate performance and reliability. The following section shows how these systems were tested across increasingly complex knowledge-work scenarios - and why they are ready for enterprise deployment.

The Methodology & Benchmarking Process

Proving breakthrough reliability naturally demands rigorous validation - so we set out to objectively benchmark our Digital Workers against industry-standard models across the most demanding tasks enterprises face. Here’s how we did it:

Benchmarking Setup

At the heart of our study, we tested agent workflows equipped with our proprietary causality mechanisms and compared their performance and execution speed against OpenAI's standard agents.

Experimental Design:

- Task Focus: Two classes of causality-heavy tasks - Root-Cause Analysis (RCA) and Causal Effect Estimation (CE).

- Data Generation: We synthesized datasets with fully known ground truths, so every answer could be scored objectively against the correct one. Each dataset was modeled on a realistic scenario, such as pharmaceutical manufacturing variances, quality control, or marketing attribution, and each problem contained 10 to 16 variables and 10,000 samples.

- Agent Inputs:

  - Baseline agents: Provided only with the synthetic data and a problem description, including inputs and/or outlier events.

  - Augmented agents (our Digital Workers): Supplied with all baseline inputs plus access to a Causality MCP (Module for Causal Processing), the relevant causal graphs, and, in some cases, explicit causal models.

Each task comprised three distinct problem instances, and each problem was run 10 times to measure both average performance and run-to-run variability.
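To illustrate what a synthetic dataset with a fully known ground truth can look like, here is a minimal Python sketch. It assumes a hypothetical four-variable manufacturing graph (far smaller than the 10 to 16 variables used in the benchmark) with made-up coefficients, and it injects outlier events whose root cause is recorded by construction. None of the variable names, coefficients, or the shock size come from the benchmark itself.

```python
import numpy as np

# Hypothetical causal graph (illustrative only, not from the benchmark):
#   temperature -> viscosity -> yield_pct   and   pressure -> yield_pct
VARIABLES = ["temperature", "pressure", "viscosity", "yield_pct"]  # column order


def simulate(n, rng, shocks=None):
    """Sample n rows from the structural equations.

    shocks maps a variable name to an offset added to that variable's own noise,
    so the shock propagates to every downstream variable.
    """
    shocks = shocks or {}

    def noise(name):
        return rng.normal(size=n) + shocks.get(name, 0.0)

    temperature = noise("temperature")
    pressure = noise("pressure")
    viscosity = 0.8 * temperature + noise("viscosity")
    yield_pct = -1.2 * viscosity + 0.5 * pressure + noise("yield_pct")
    return np.column_stack([temperature, pressure, viscosity, yield_pct])


rng = np.random.default_rng(7)
normal_data = simulate(10_000, rng)  # 10,000 in-control samples

# Outlier events whose true root cause is known because we injected it.
candidate_causes = ["temperature", "pressure", "viscosity"]
root_causes = rng.choice(candidate_causes, size=1_000)
outliers = np.vstack([simulate(1, rng, {cause: 5.0}) for cause in root_causes])
print(normal_data.shape, outliers.shape)
```

A shock to `temperature` also pushes `viscosity` up by about 0.8 × 5 = 4 units on average, so an agent that simply blames the most anomalous variable can mistake a downstream symptom for the root cause. This is the direct-versus-indirect difficulty that makes RCA hard for purely correlational approaches.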

Causality Tasks in Detail

- Root-Cause Analysis (RCA):

Agents were challenged to identify the factors responsible for anomalous outcomes, such as pinpointing which operational variable caused a production yield failure. For each RCA problem, agents submitted a ranked list of candidate root causes for each of thousands of synthetic outlier events. Performance was measured with top-3 accuracy: the share of events whose true root cause appeared among the agent's three highest-ranked candidates. Real-world-inspired challenges, such as distinguishing direct from indirect causal effects, made these tasks especially tough for conventional models.

- Causal Effect Estimation (CE):

Here, agents had to estimate the effect of multiple treatment or intervention variables on an outcome, mirroring questions such as how education investments affect income or how ad spend moves revenue. Performance was measured with scaled root-mean-squared error (Scaled RMSE) against the known true effects, a quantitative measure of how close each estimate came to ground truth.
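The two scoring rules can be sketched in a few lines of Python. Top-3 accuracy follows directly from the RCA description above. For Scaled RMSE, the article does not say what the RMSE is scaled by, so this sketch assumes division by the mean absolute true effect. The function names and example variables are hypothetical.

```python
import numpy as np


def top_k_accuracy(rankings, true_causes, k=3):
    """Share of outlier events whose true root cause is among the first k candidates."""
    if len(rankings) != len(true_causes):
        raise ValueError("expected one ranked list per event")
    hits = sum(truth in ranking[:k] for ranking, truth in zip(rankings, true_causes))
    return hits / len(true_causes)


def scaled_rmse(estimated, true):
    """RMSE of estimated vs. true causal effects, divided by the mean |true effect|.

    ASSUMPTION: this normaliser is a guess; the benchmark's exact scaling is not stated.
    """
    estimated = np.asarray(estimated, dtype=float)
    true = np.asarray(true, dtype=float)
    rmse = np.sqrt(np.mean((estimated - true) ** 2))
    scale = np.mean(np.abs(true))
    if scale == 0:
        raise ValueError("all true effects are zero; pick a different normaliser")
    return float(rmse / scale)


# Hypothetical example: three outlier events, each with a ranked list of candidates.
rankings = [
    ["temperature", "pressure", "humidity"],
    ["pressure", "line_speed", "temperature"],
    ["humidity", "temperature", "pressure"],
]
truth = ["pressure", "temperature", "line_speed"]
print(top_k_accuracy(rankings, truth))      # 2 of 3 events are hits
print(scaled_rmse([1.8, 0.5], [2.0, 0.4]))  # two estimated treatment effects
```

Normalising the RMSE makes errors comparable across problems whose effects live on different scales. Any other normaliser (for example the standard deviation of the outcome) would change the absolute numbers but not the ranking of agents on a single problem.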

Technical Execution & Metrics

- Performance Metrics: RCA tasks used top-3 accuracy, while CE tasks measured error via Scaled RMSE. Both are scored against the known causal ground truth, so they reward correct causal answers rather than merely plausible correlations.

- Execution Time Tracking: For each problem, each agent's average execution time was logged so that efficiency gains could be attributed to the technology.

- Repeatability & Statistical Significance: All results are averages across 3 problems and 10 iterations per problem, which smooths out anomalies in individual runs.

- Comparative Analysis: Results for Digital Workers using our technology were directly compared to non-causal baselines to isolate the impact of our unique architecture.
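The averaging described above (3 problems × 10 iterations) can be sketched with Python's standard `statistics` module. The input layout and the numbers in the example are hypothetical placeholders, not benchmark results. Reporting the standard deviation next to the mean is one simple way to expose the run-to-run variability the setup was designed to capture.

```python
import statistics


def summarise_runs(scores):
    """Summarise a metric recorded as scores[problem][iteration].

    With 3 problems x 10 iterations, scores is a 3 x 10 nested list.
    """
    if any(len(runs) < 2 for runs in scores):
        raise ValueError("need at least two iterations per problem")
    all_runs = [s for runs in scores for s in runs]
    return {
        "per_problem_mean": [statistics.mean(runs) for runs in scores],
        "per_problem_stdev": [statistics.stdev(runs) for runs in scores],
        "overall_mean": statistics.mean(all_runs),
        "overall_stdev": statistics.stdev(all_runs),  # spread across all 30 runs
    }


# Placeholder numbers only: top-3 accuracy for 3 problems x 10 iterations.
example = [[0.9] * 10, [0.8] * 10, [0.7] * 10]
summary = summarise_runs(example)
print(summary["overall_mean"], summary["overall_stdev"])
```

Because every problem has the same number of iterations, the overall mean equals the mean of the per-problem means. With unequal iteration counts the two would differ, and it would be worth stating which one is reported.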

Results: Unprecedented Gains

- Performance Improvement: Our Digital Workers delivered average performance improvements of 4x to 20x over baseline agents, most pronounced in advanced reasoning use cases where simple correlation falls flat.

- Operational Reliability: In realistic, day-to-day use cases, our Digital Workers achieved up to 3x better reliability than OpenAI models, even without our proprietary causal technology.

- Speed: Crucially, these gains did not come at the cost of slower execution. Average execution was 1.25x to 3.5x faster, thanks to better decision paths informed by causal understanding.
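Factors such as "4x to 20x" or "1.25x to 3.5x" depend on which direction counts as better for each metric. The helper below is a hedged sketch of one consistent convention, which is an assumption and not necessarily the one used in the benchmark: divide the augmented score by the baseline for higher-is-better metrics, and the baseline by the augmented score for error or time, so that every improvement yields a factor above 1. It also shows why a 0% baseline accuracy makes an accuracy ratio unbounded. All inputs in the example are placeholders.

```python
import math


def improvement_factor(baseline, augmented, higher_is_better=True):
    """How many times better the augmented agent is than the baseline.

    higher_is_better=True for accuracy-style metrics (augmented / baseline);
    False for error or time metrics (baseline / augmented).
    """
    numerator, denominator = (
        (augmented, baseline) if higher_is_better else (baseline, augmented)
    )
    if denominator == 0:
        # e.g. 0% baseline accuracy: unbounded if the other score is positive,
        # undefined (nan) if both scores are zero.
        return math.inf if numerator > 0 else math.nan
    return numerator / denominator


def speedup(baseline_seconds, augmented_seconds):
    """Execution-time speedup; values above 1 mean the augmented agent was faster."""
    return improvement_factor(baseline_seconds, augmented_seconds, higher_is_better=False)


# Placeholder inputs only, chosen to illustrate the arithmetic.
print(improvement_factor(0.05, 0.60))                          # accuracy ratio
print(improvement_factor(0.40, 0.10, higher_is_better=False))  # error ratio
print(speedup(70.0, 20.0))                                     # 3.5
print(improvement_factor(0.0, 0.60))                           # inf
```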

Example Scenarios:

- In one RCA challenge, baseline models scored 0% accuracy on the second problem, a reminder of the limits of traditional AI, while our Digital Workers identified the correct root causes using causal graphs and advanced reasoning modules.

- In CE tasks, our Digital Workers correctly accounted for confounding variables, revealing the true drivers of outcomes rather than misleading correlations.
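Why confounding matters in CE tasks can be shown with a small simulation. In this hypothetical marketing example (variable names and coefficients are illustrative assumptions), seasonality drives both ad spend and revenue. Regressing revenue on ad spend alone therefore overstates the true effect of 2.0. Adding the confounder as a covariate, a standard back-door adjustment that is valid here because the simulated graph is known, recovers it. This is a textbook linear-regression illustration, not a description of the Digital Workers' internals.

```python
import numpy as np

rng = np.random.default_rng(42)
n = 10_000
TRUE_EFFECT = 2.0  # hypothetical ground-truth effect of ad spend on revenue

# Structural equations: season confounds both the treatment and the outcome.
season = rng.normal(size=n)
ad_spend = 1.5 * season + rng.normal(size=n)
revenue = TRUE_EFFECT * ad_spend + 3.0 * season + rng.normal(size=n)


def ols_coefficients(outcome, *covariates):
    """Ordinary least squares with an intercept; returns [intercept, *slopes]."""
    design = np.column_stack([np.ones(len(outcome)), *covariates])
    coef, *_ = np.linalg.lstsq(design, outcome, rcond=None)
    return coef


naive = ols_coefficients(revenue, ad_spend)[1]             # ignores the confounder
adjusted = ols_coefficients(revenue, ad_spend, season)[1]  # back-door adjustment
print(f"naive: {naive:.2f}, adjusted: {adjusted:.2f}, true: {TRUE_EFFECT}")
```

With these coefficients, the naive slope tends toward cov(revenue, ad_spend) / var(ad_spend) = (2.0 × 3.25 + 3.0 × 1.5) / 3.25 ≈ 3.38, a large overestimate that looks convincing if the confounder is ignored.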

Conclusion

Digital Workers are redefining what's possible for enterprises seeking scalable, reliable AI in high-value knowledge work. By combining advanced capabilities such as causal reasoning, self-healing, learning from traces, and judgment-based agents with human-in-the-loop oversight and expert deployment, we deliver solutions that perform where others fall short. The result is not just improved accuracy, but transformative operational confidence at scale.

If you’re ready to elevate reliability and unlock new levels of productivity, we invite you to discover more about our Digital Workers or contact us to arrange a demo.

Reliable Digital Workers

causaLens builds reliable Digital Workers for high-stakes decisions in regulated industries.