Self-Improving Digital Workers: Agentic Improvement via Autonomous Learning Loop

The Challenge

Though it can be hard to admit, leaders know that their organization is terrifyingly dependent on tacit knowledge: the kind that lives in the heads of a few experienced people, where the only “documentation” is stale Slack threads and half-remembered meeting notes. New hires spend months absorbing it. By the time they’ve mastered it, they’re too busy to document it, and when they move on, the learning resets.

Digital workers should offer a better path.

But off-the-shelf agents built on LLMs don’t learn either. Once deployed, they’re static: they respond, but they don’t adapt. They handle inputs, but they don’t improve. Like junior employees frozen on day one, they repeat the same costly mistakes until an expensive AI engineer dives in to fix the problem.

When workers — human or artificial — don’t learn from experience, organizations face:

- Institutional knowledge trapped in individual minds, not embedded in systems

- Repetitive mistakes that never resolve without manual intervention

- Missed opportunities to optimize workflows through accumulated experience

- Expensive training, retraining, and re-engineering labor — human or digital

Agents that don’t learn are like expensive interns who never get better.

The rise of generative AI has made digital labor viable for the first time — but static agents miss the opportunity. The organizations that figure out self-improvement first will compound operational advantages others can’t catch.

Otherwise, the pattern will continue – critical knowledge walks out the door, productivity stalls, and high-value teams spend time on avoidable rework. Static systems lead to mounting maintenance costs, missed revenue opportunities, and increased operational risk.

The causaLens Solution: Digital Workers That Get Better Every Day

At causaLens, we’re building self-improving AI-based digital workers — agents that get better over time through an autonomous learning loop. These agents don’t just execute workflows. They monitor their own performance and adjust their behavior as they go, learning what works, what doesn’t, and how to adapt.

This learning loop allows digital workers to improve with every interaction. They refine how they collect data, communicate with systems (and people), and make decisions — all without human reprogramming. Over time, they absorb institutional know-how that was previously impossible to codify.

Self-improving digital workers capture the tacit knowledge your business runs on — and embed it into repeatable workflows.

Self-improving agents offer unique, compounding value to organizations:

- Trust: Results get more reliable over time as agents learn from past performance and avoid repeating mistakes.

- Scale: The human effort required to monitor or maintain digital workers drops sharply, freeing teams from constant retraining or intervention.

- Adaptability: As conditions, data sources, or expectations change, agents adjust in place — learning from the new context without starting from zero.

Our reusable blueprints let us deploy these self-improving agents in days, not months — unlocking workflows that were too complex or variable to automate before. And unlike static agents, they don’t decay — they get better, every day they work.

How digital workers can learn and improve

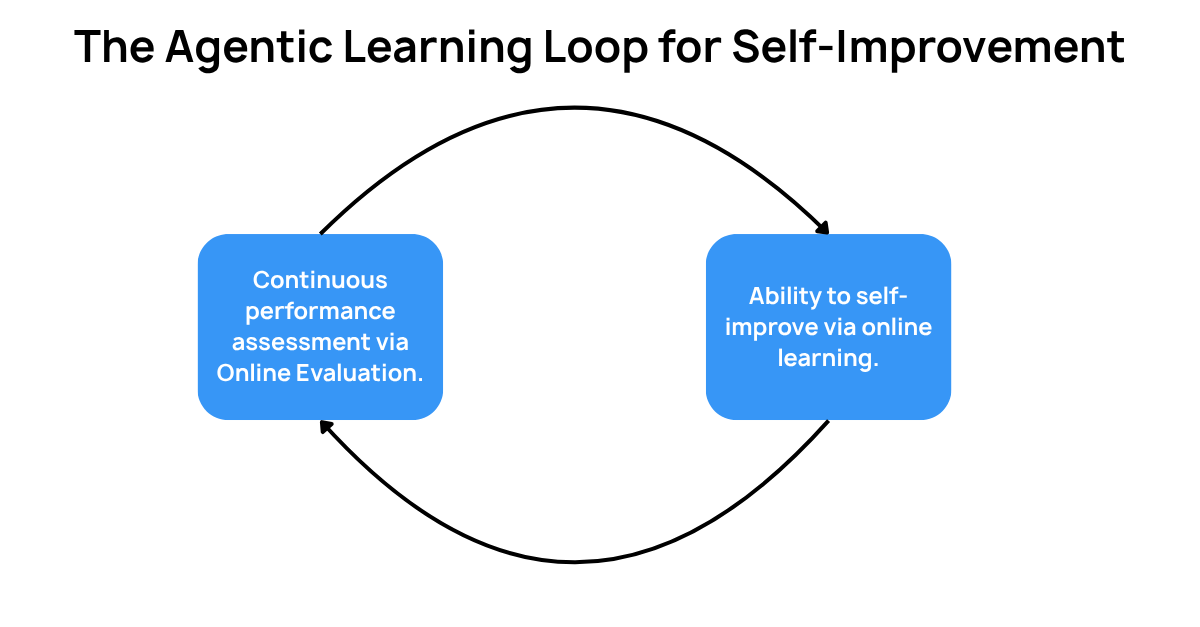

Digital workers aren’t self-improving out of the box, so here at causaLens we’re investing in agentic self-improvement. To self-improve, agents need to be equipped with an autonomous learning loop, which has two components.

First, agents must be able to continuously evaluate their own performance. Second, they must be able to take action to improve it. These capabilities are called online evaluation and online learning, respectively, in the academic literature.

Agents evaluating their own performance:

- Track success in real time for every interaction, evaluating how accurately and efficiently they completed the assigned task

- Score performance on their own, using AI judges to evaluate how well they’ve performed

And agents that can improve their performance:

- Find patterns in failures to identify opportunities for self-improvement

- Propose and/or implement improvements based on failures. These improvements can be completely autonomous or proposed by the agent and approved by users and/or developers.

For a self-improving agent, every assignment is a learning opportunity — and a chance to turn tacit knowledge into operational muscle.

There are three ways agents can compute success or failure of an interaction in online evaluation:

- Explicit feedback: request feedback from the user (e.g. thumbs up/down).

- Implicit Success Signals: evaluate whether the user was satisfied quickly (fulfillment), only after some back-and-forth (continuation/reformulation), or gave up (abandonment).

- AI Critics: use an AI “judge” to review an interaction and score success. This can be particularly useful for evaluating performance in a single step of a longer interaction.
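As a rough illustration of how the first two signal types might be combined, the sketch below labels a finished interaction from its turn count and whether the user accepted the result, and lets explicit feedback override the implicit label when it exists. The `Interaction` fields, the `classify_outcome` and `is_success` helpers, and the one-turn threshold are all hypothetical choices made for this example, not a description of the causaLens implementation.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Outcome(Enum):
    FULFILLMENT = "fulfillment"    # user was satisfied quickly
    CONTINUATION = "continuation"  # satisfied only after back-and-forth
    ABANDONMENT = "abandonment"    # user gave up


@dataclass
class Interaction:
    # Hypothetical log record; field names are assumptions for this sketch.
    user_turns: int                   # number of messages the user sent
    accepted: bool                    # did the user accept the final result?
    thumbs_up: Optional[bool] = None  # explicit feedback, if the user gave any


def classify_outcome(interaction: Interaction, quick_turns: int = 1) -> Outcome:
    """Label an interaction using implicit signals only."""
    if not interaction.accepted:
        return Outcome.ABANDONMENT
    if interaction.user_turns <= quick_turns:
        return Outcome.FULFILLMENT
    return Outcome.CONTINUATION


def is_success(interaction: Interaction) -> bool:
    """Explicit feedback wins when present; otherwise use the implicit label."""
    if interaction.thumbs_up is not None:
        return interaction.thumbs_up
    return classify_outcome(interaction) is not Outcome.ABANDONMENT
```

An AI critic could be slotted in as a third source in `is_success`, for example to score individual steps that neither the user nor the turn count can judge.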

How independently agents can improve depends heavily on the use case. We can figure out the right answer for yours.

After deployment, there are two opportunities for online learning:

- Adding examples or rules: few-shot learning – deriving correct behavior from a small set of positive examples – is frequently used to train agents. Agents can be granted the capability to add to their own few-shot repositories by deriving positive examples from unsuccessful interactions, improving performance over time.

- Self-adjusting policy: digital workers in enterprise contexts frequently feature extensive policies and prompts for how to respond to users and determine the correct behavior. Agents can be given the ability to interpret observed successes or failures in a reinforcement learning framework and recommend or undertake adjustments to their own policies and prompts to make better outcomes more likely next time.
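The first option, a few-shot repository that grows on its own, can be sketched as follows. This is a minimal sketch under stated assumptions: `FewShotStore`, its `record` and `build_prompt` methods, and the cap on stored examples are hypothetical names and choices for illustration. An interaction becomes a positive example either because it was judged successful or because a human supplied a correction after a failure.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Example:
    request: str
    response: str


@dataclass
class FewShotStore:
    # Hypothetical repository of positive examples; the cap keeps prompts bounded.
    max_examples: int = 5
    examples: List[Example] = field(default_factory=list)

    def record(self, request: str, agent_response: str, success: bool,
               correction: Optional[str] = None) -> bool:
        """Store a positive example; return True if something was added."""
        if success:
            good_response = agent_response
        elif correction is not None:
            # A failed interaction still yields a positive example
            # once someone has supplied the correct answer.
            good_response = correction
        else:
            return False
        self.examples.append(Example(request, good_response))
        # Keep only the most recent examples.
        self.examples = self.examples[-self.max_examples:]
        return True

    def build_prompt(self, instructions: str, request: str) -> str:
        """Assemble instructions, stored examples, and the new request."""
        shots = "\n\n".join(
            f"Request: {ex.request}\nResponse: {ex.response}"
            for ex in self.examples
        )
        parts = [instructions, shots, f"Request: {request}\nResponse:"]
        return "\n\n".join(p for p in parts if p)
```

Keeping only recent examples is one simple retention policy; ranking examples by similarity to the incoming request would be a natural alternative.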

Depending on the digital worker and its context, there are a few different potential ways to complete the learning loop and improve the agent.

- Agent categorization: in the simplest case, agents can review the output of their self-evaluation, categorizing common failure patterns and suggesting where more examples, policies, or prompts might be useful.

- Agent proposal: agents can take self-improvement one step further to propose changes to examples, policies, or prompts that may be implemented only with human approval.

- Fully-autonomous self-improvement: in certain contexts, agents may be able to update policies and prompts from every interaction, or in a batch mode after a certain number of examples.
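The difference between these levels of autonomy comes down to what happens after a failure pattern is found. The sketch below makes that explicit for a batch of failures that have already been labeled by category. `Mode`, `Result`, `run_learning_step`, and the `approve` callback are hypothetical names used only for illustration, and the "change" is a placeholder string rather than a real policy edit.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum
from typing import Callable, List, Optional


class Mode(Enum):
    CATEGORIZE = 1  # report the most common failure pattern only
    PROPOSE = 2     # suggest a change; apply it only with human approval
    AUTONOMOUS = 3  # apply the change without waiting for approval


@dataclass
class Result:
    top_category: Optional[str]
    proposed_change: Optional[str]
    applied: bool


def run_learning_step(
    failure_categories: List[str],
    mode: Mode,
    approve: Callable[[str], bool] = lambda change: False,
) -> Result:
    """Process one batch of labeled failures according to the autonomy mode."""
    if not failure_categories:
        return Result(None, None, False)
    top, count = Counter(failure_categories).most_common(1)[0]
    if mode is Mode.CATEGORIZE:
        return Result(top, None, False)
    # Placeholder for a real edit to examples, policies, or prompts.
    change = f"Add guidance for '{top}' ({count} failures)"
    applied = mode is Mode.AUTONOMOUS or approve(change)
    return Result(top, change, applied)
```

Because the default `approve` callback rejects everything, a deployment in `PROPOSE` mode changes nothing until a reviewer is wired in, which is a conservative default for this kind of loop.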

What it looks like in action

A top executive search firm struggled to assign the right person to pitch clients across 200+ inbound opportunities per month. causaLens built a digital worker that could handle assignments on the fly.

It wasn’t possible to encode every rule the agent needed to know ahead of time. Some needed to be learned on the fly.

A key challenge was codifying assignment rules that lived only in the minds of senior staff. High-priority pitches required seniority. Some team members needed ride-alongs for C-suite searches. Others were developing new verticals and should be assigned more often than their track record suggested.

But not all rules could be captured upfront — many would emerge only through use. Roles would evolve. New hires would arrive. Some knowledge had to be learned on the fly.

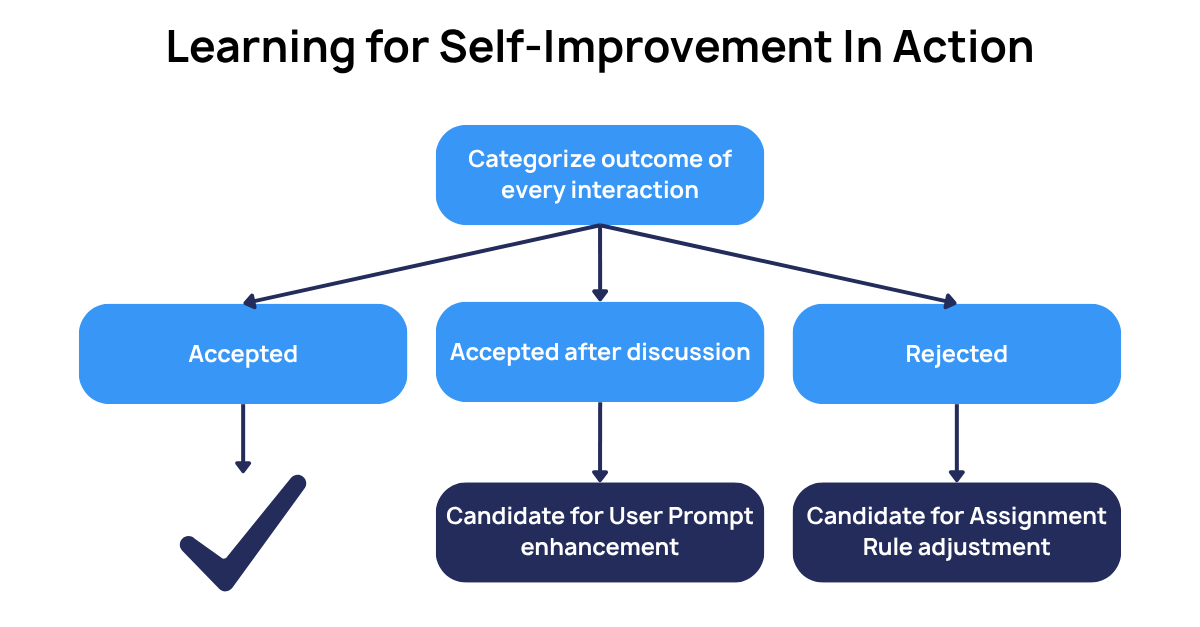

The digital worker was built for exactly this. From day one, it performed online evaluation — tracking whether its assignments were accepted immediately, accepted after discussion, or rejected outright.

These signals fed two self-improvement loops:

- Rule Discovery Loop: When overruled, the agent flagged missing logic and, after enough examples, proposed rule updates.

- Interaction Loop: When decisions required back-and-forth, it updated its output prompts to automatically include frequently requested context.
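The two loops can be pictured as simple counters over the evaluation signals. The sketch below is illustrative only: the `AssignmentFeedback` class, its method names, the free-text override reasons, and the `min_examples` threshold are assumptions made for this example and do not describe the system built for the firm.

```python
from collections import Counter
from typing import List, Optional


class AssignmentFeedback:
    """Hypothetical tracker feeding both self-improvement loops."""

    def __init__(self, min_examples: int = 3):
        self.min_examples = min_examples
        self.override_reasons = Counter()  # rule discovery loop
        self.context_requests = Counter()  # interaction loop
        self.proposed = set()              # reasons already turned into proposals

    def record_override(self, reason: str) -> Optional[str]:
        """Count an overruled assignment; propose a rule once per reason."""
        self.override_reasons[reason] += 1
        enough = self.override_reasons[reason] >= self.min_examples
        if enough and reason not in self.proposed:
            self.proposed.add(reason)
            return f"Proposed rule: account for '{reason}' when assigning"
        return None

    def record_context_request(self, item: str) -> None:
        """Count a piece of context a reviewer had to ask for."""
        self.context_requests[item] += 1

    def default_context(self) -> List[str]:
        """Context requested often enough to include in every output."""
        return sorted(
            item for item, n in self.context_requests.items()
            if n >= self.min_examples
        )
```

For example, with `min_examples=2`, the second override tagged with the same reason returns a rule proposal for review, and later overrides with that reason return `None` so the same proposal is not raised repeatedly.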

These self-improvement features weren’t just technically interesting — they were mission-critical. It’s only via self-improvement that the digital worker was able to turn the tacit judgement of experienced staff into op

And the payoff is obvious: less reliance on bottlenecked expertise, faster handoffs, and decisions that get better over time — not stale.

The winners in this wave of enterprise AI won’t be the ones who deploy agents — they’ll be the ones whose agents learn.

causaLens makes that possible today.